Meta's AI chip made with TSMC's 5nm process

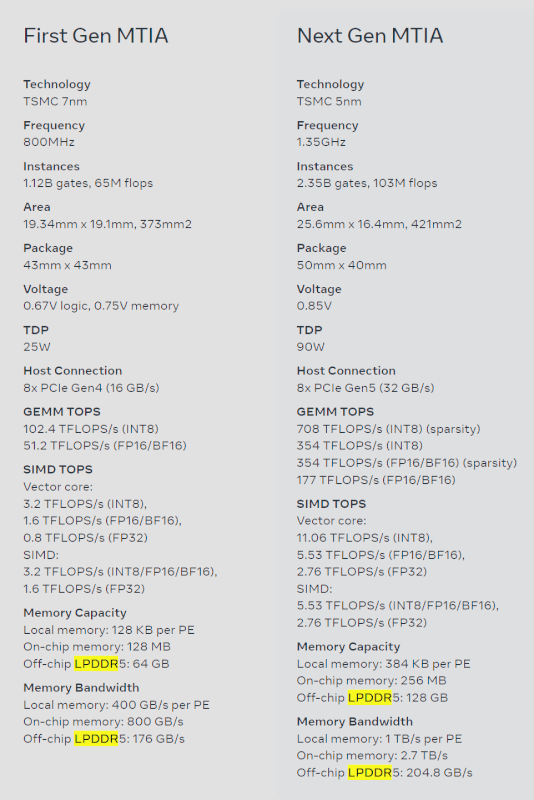

Meta has just given us a sneak peek at their next generation AI chip - MTIA, which is an upgrade over their current chip, MTIA v1. The new MTIA chip is produced on TSMC's newer 5nm process node, where the original MTIA chip was produced on 7nm.

The new Meta Training and Inference Accelerator (MTIA) chip is "fundamentally focused on delivering the right balance of computation, memory bandwidth and memory capacity" that will be used for Meta's unique needs. We've seen the best AI GPUs in the world use HBM memory, with HBM3 used on NVIDIA's Hopper H100 and AMD Instinct MI300 series AI chips, but the Meta uses low-power DRAM memory (LPDDR5) instead server DRAM or LPDDR5 memory.

The social networking giant created its MTIA chip as a first-generation AI inference accelerator that was designed in-house for Meta's AI workload. The company says its deep learning recommendation models "enhance a range of experiences across our products" .

Meta's long-term goal and their journey with the AI inference processor is to deliver the most efficient architecture for Meta's unique workloads. The company adds that as AI workloads become increasingly important to Meta's products and services, the efficiency of their MTIA chips will improve their ability to deliver the best experiences for their users around the globe.

Meta explains on their website for MTIA: "This chip's architecture is fundamentally focused on delivering the right balance of computation, memory bandwidth, and memory capacity for serving ranking and recommendation models. In inference, we should be able to deliver relatively high utilization, even when our batch sizes are relatively small By focusing on providing outsized SRAM capacity, relative to typical GPUs, we can provide high utilization in cases where batch sizes are limited and provide sufficient computation when we have larger amounts of potentially simultaneous work.

This accelerator consists of an 8x8 grid of processing elements (PE). These PEs deliver significantly improved dense compute performance (3.5x over MTIA v1) and sparse compute performance (7x improvement). This comes in part from improvements in the architecture associated with pipelining of sparse computation. It also comes from how we feed the PE grid: we've tripled the size of local PE storage, doubled the size of on-chip SRAM and increased the bandwidth by 3.5X, and doubled the capacity of LPDDR5.

Latest processor - cpu

-

29 Aprprocessor - cpu

-

28 Aprprocessor - cpu

Intel still in trouble

-

26 Aprprocessor - cpu

Nye AMD Strix Point, Strix Halo spec leaks

-

26 Aprprocessor - cpu

TSMC's new COUPE tech ready in 2026

-

26 Aprprocessor - cpu

First NVIDIA DGX H200 for OpenAI

-

25 Aprprocessor - cpu

Qualcomm unveils Snapdragon X Elite

-

25 Aprprocessor - cpu

TSMC's 'A16' chip poised to challenge Intel in 202

-

25 Aprprocessor - cpu

Nvidia is still strong on AI but can be challenged

Most read processor - cpu

Latest processor - cpu

-

29 Aprprocessor - cpu

Intel points to motherboards for instability

-

28 Aprprocessor - cpu

Intel still in trouble

-

26 Aprprocessor - cpu

Nye AMD Strix Point, Strix Halo spec leaks

-

26 Aprprocessor - cpu

TSMC's new COUPE tech ready in 2026

-

26 Aprprocessor - cpu

First NVIDIA DGX H200 for OpenAI

-

25 Aprprocessor - cpu

Qualcomm unveils Snapdragon X Elite

-

25 Aprprocessor - cpu

TSMC's 'A16' chip poised to challenge Intel in 202

-

25 Aprprocessor - cpu

Nvidia is still strong on AI but can be challenged