Apple's new accessibility features

Apple has announced new accessibility features coming to iOS and iPadOS, designed to make its devices easier to use for everyone, including people with disabilities. The headline feature is Eye Tracking, which, as the name suggests, lets an iPhone or iPad follow a user's eyes and use them as input.

By shifting their gaze, users can navigate through and interact with apps. According to Apple, the feature lets users perform actions such as tapping on items, scrolling and other gestures "with just their eyes". Similar functionality has been available on other devices for some time, but it has typically required additional hardware and specialized software.

Eye Tracking works across apps and does not require any additional hardware. According to Apple, compatible devices make this possible by using the front-facing camera and "on-device machine learning," and all data used by the feature stays on the device and is not shared. The feature could be especially useful for people with limited mobility, giving them an easier way to use their device.

Music Haptics makes an iPhone vibrate in sync with audio played in Apple's Music app. According to Apple, this gives "users who are deaf or hard of hearing a new way to experience music on iPhone". The feature currently works with the Music app, but the company will release an API so developers can add it to their own apps.

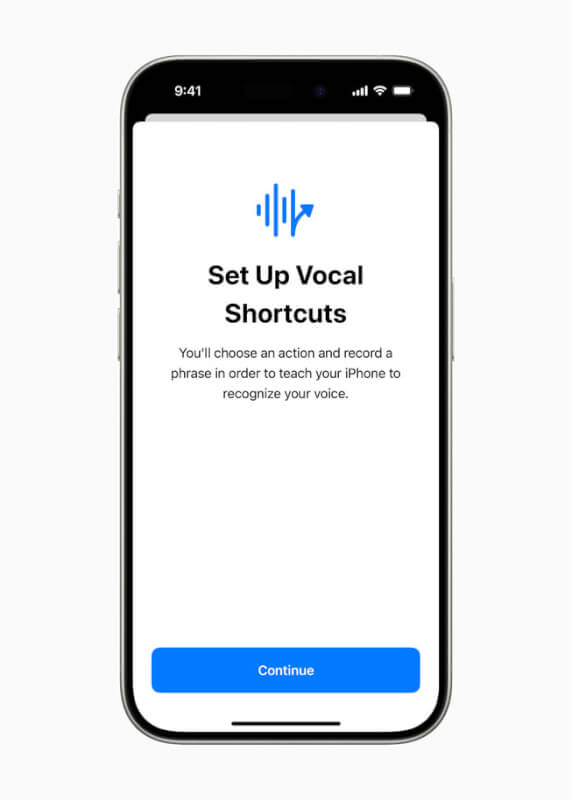

Vocal Shortcuts let users set up custom vocal commands: Siri can learn to recognize non-traditional utterances or sounds and use them to launch shortcuts and perform other tasks. Similarly, "Listen for Atypical Speech" lets the device learn how a user speaks, so people with speech impairments can train their phone to understand them better.

Vehicle Motion Cues is designed to help reduce motion sickness for passengers in moving vehicles. It shows animated dots along the edges of the screen that represent changes in the vehicle's motion, using the iPhone or iPad's sensors to keep the dots in sync with the movement. The feature can be set to turn on automatically or toggled with a button in Control Center.

CarPlay also gets new accessibility features, including Voice Control, Color Filters for colorblind users, and Sound Recognition, which can help drivers and passengers who are deaf or hard of hearing notice environmental sounds such as sirens or alarms. Apple has also updated several existing accessibility features; the full list is on Apple's news page.
