Micron HBM3E shocks SK hynix with NVIDIA H200 AI GPU

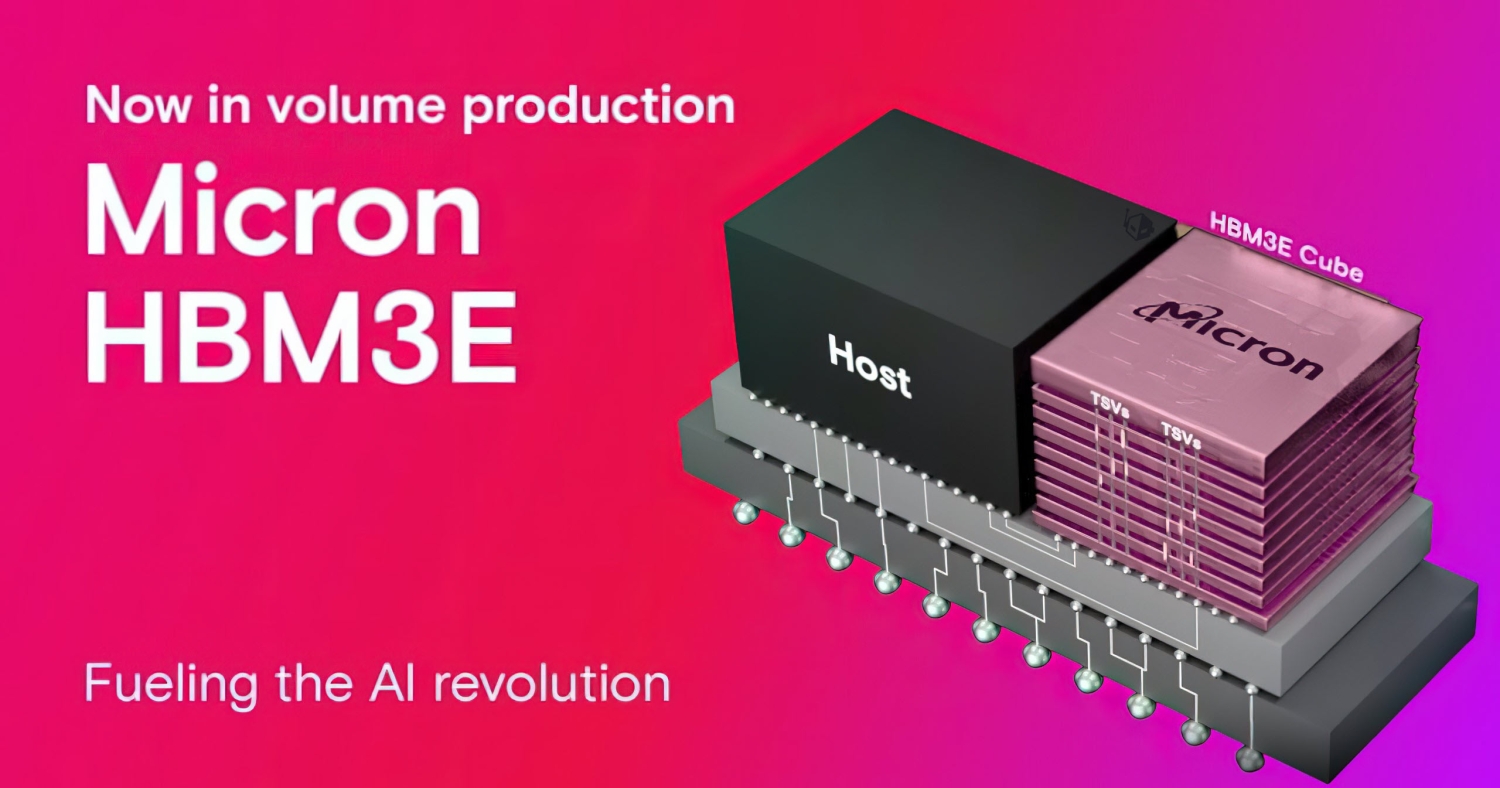

Micron was the first to announce mass production of its new ultra-fast HBM3E memory, in February 2024, putting the company ahead of HBM rivals SK hynix and Samsung. The American memory maker announced that it will supply HBM3E chips for NVIDIA's upcoming H200 AI GPU. The H200 uses HBM3E memory, unlike its predecessor, the H100, which uses HBM3.

Micron will manufacture its new HBM3E memory on its 1b-nanometer DRAM process, which is comparable to the 12nm-class node that HBM leader SK hynix uses for its HBM. According to Korea JoongAng Daily, Micron is "technically ahead" of HBM competitor Samsung, which still uses 1a-nanometer technology, roughly equivalent to a 14nm-class process.

Micron's new 24GB 8-Hi HBM3E memory will be at the heart of NVIDIA's upcoming H200 AI GPU, and Micron's lead in HBM process technology is an essential part of its deal with NVIDIA. Micron describes its new HBM3E memory as follows:

- Superior performance: With a pin speed of over 9.2 gigabits per second (Gb/s), Micron's HBM3E delivers more than 1.2 terabytes per second (TB/s) of memory bandwidth, enabling lightning-fast data access for AI accelerators, supercomputers, and data centers.

- Extraordinary efficiency: The HBM3E leads the industry with ~30% lower power consumption than competitive offerings. To support the growing demand for and use of AI, the HBM3E offers maximum throughput at the lowest levels of power consumption, improving key data center operating-cost metrics.

- Seamless scalability: With 24GB of capacity today, the HBM3E enables data centers to seamlessly scale their AI applications. Whether training massive neural networks or accelerating inference tasks, Micron's solution provides the necessary memory bandwidth.
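To see how the headline figures relate, here is a back-of-the-envelope sketch. It assumes each HBM3E stack has a 1024-bit data interface (the standard HBM stack width, not a figure stated by Micron above). The per-die density follows from the article's numbers: a 24GB stack built from 8 dies implies 3GB, or 24 Gb, per die. The helper names are hypothetical.

```python
# Back-of-the-envelope HBM3E arithmetic (illustrative sketch only).
# ASSUMPTION: 1024 data pins per HBM stack (standard HBM interface width).
HBM_INTERFACE_BITS = 1024


def stack_bandwidth_gbs(pin_speed_gbps: float, interface_bits: int = HBM_INTERFACE_BITS) -> float:
    """Peak bandwidth of one stack in gigabytes per second.

    Each pin moves pin_speed_gbps gigabits per second; multiply by the
    number of pins, then divide by 8 to convert bits to bytes.
    """
    return pin_speed_gbps * interface_bits / 8


def stack_capacity_gb(dies: int, die_density_gbit: int) -> float:
    """Capacity of one stack in gigabytes: dies x per-die density (Gb) / 8."""
    return dies * die_density_gbit / 8


if __name__ == "__main__":
    for speed in (9.2, 9.6):
        bw = stack_bandwidth_gbs(speed)
        # 1 TB/s is taken as 1000 GB/s (decimal units).
        print(f"{speed} Gb/s per pin -> {bw:.1f} GB/s (~{bw / 1000:.2f} TB/s)")
    # 8-Hi stack; 24 Gb per die is derived from 24GB / 8 dies.
    print(f"8-Hi stack of 24 Gb dies -> {stack_capacity_gb(8, 24):.0f} GB")
```

If the 1024-bit assumption holds, a pin speed of exactly 9.2 Gb/s works out to about 1.18 TB/s per stack, so the "more than 1.2 TB/s" figure corresponds to pin speeds of roughly 9.4 Gb/s or higher.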

Jeon In-seong, a chip expert and author of "The Future of the Semiconductor Empire," said: "It is proven that Micron's manufacturing method is more advanced than Samsung Electronics because their HBM3E will be made with 1b nanometer technology. Micron will need some more work on packaging, but it should be easier than what they've already achieved with 1b nanometer technology."
