SK Hynix accelerates HBM plan

The company is moving faster on high-bandwidth memory (HBM), but industry experts say the lead it has built over rivals Samsung and Micron will face tougher competition.

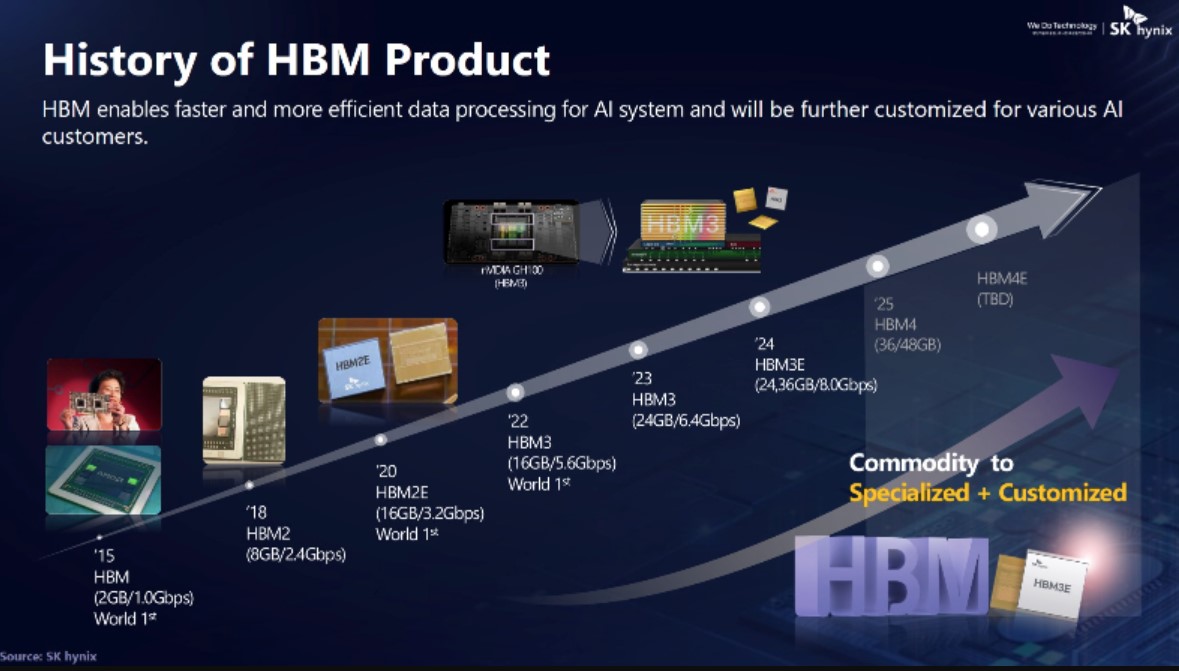

SK Hynix said at an industry event last month that it may be the first to introduce next-generation HBM4, in 2025. At the same event, the company showed a presentation slide depicting two HBM3E modules packaged in Nvidia's Grace Hopper GH200 Superchip.

SVP Ilsup Jin, who heads the company's DRAM and NAND technology development, said at ITF World in Antwerp that HBM4 may arrive sooner than expected. "HBM4 is coming pretty quickly," Jin said. "It's coming next year."

SK Hynix leads the HBM market, with more than 85% of HBM3 and more than 70% of the overall HBM market, SemiAnalysis Chief Analyst Dylan Patel told EE Times earlier this year. Competition is expected to intensify, however, according to Sri Samavedam, SVP of CMOS technologies at the global R&D organization imec.

"SK Hynix were out early and they got ahead," Samavedam told EE Times. "Micron is not far behind. They came out with some really competitive HBM offerings last year and an HBM3E offering this year, too."

In February, Micron announced commercial production of its HBM3E, which will be used in Nvidia's H200 Tensor Core GPUs, scheduled to ship in the second quarter of 2024.

Advanced packaging is critical to wider adoption of HBM. According to an article in the Korean Economic Newspaper, Samsung will offer a 3D packaging service for HBM this year, followed by its own HBM4 in 2025.

Samsung has also announced plans to introduce 12-layer HBM3E products by the second quarter of 2024, and has pledged to strengthen its HBM supply capabilities and technological competitiveness. Limited HBM supply is a potential bottleneck for the expansion of AI models and services.

Latest RAM

- 16 Oct: Kingston takes the RAM throne in 2024
- 07 Aug: Nvidia approves Samsung's 8-layer HBM3E chips
- 06 Aug: Samsung presents thinnest LPDDR5X DRAM
- 05 Aug: SK hynix focuses on advanced HBM chips
- 31 Jul: SK Hynix launches 60% faster GDDR7
- 28 Jun: SK Hynix accelerates HBM plan
- 17 Jun: AI boosts local chip demand in China
- 17 Jun: Samsung to launch 3D HBM chip service in 2024
