SK Hynix starts mass production of HBM3E

SK Hynix announced on Tuesday that it has begun mass production of next-generation high-bandwidth memory (HBM) chips used in artificial intelligence (AI) chipsets. Initial deliveries will go to Nvidia this month, according to sources. The new chip, called HBM3E, is the focus of intense competition.

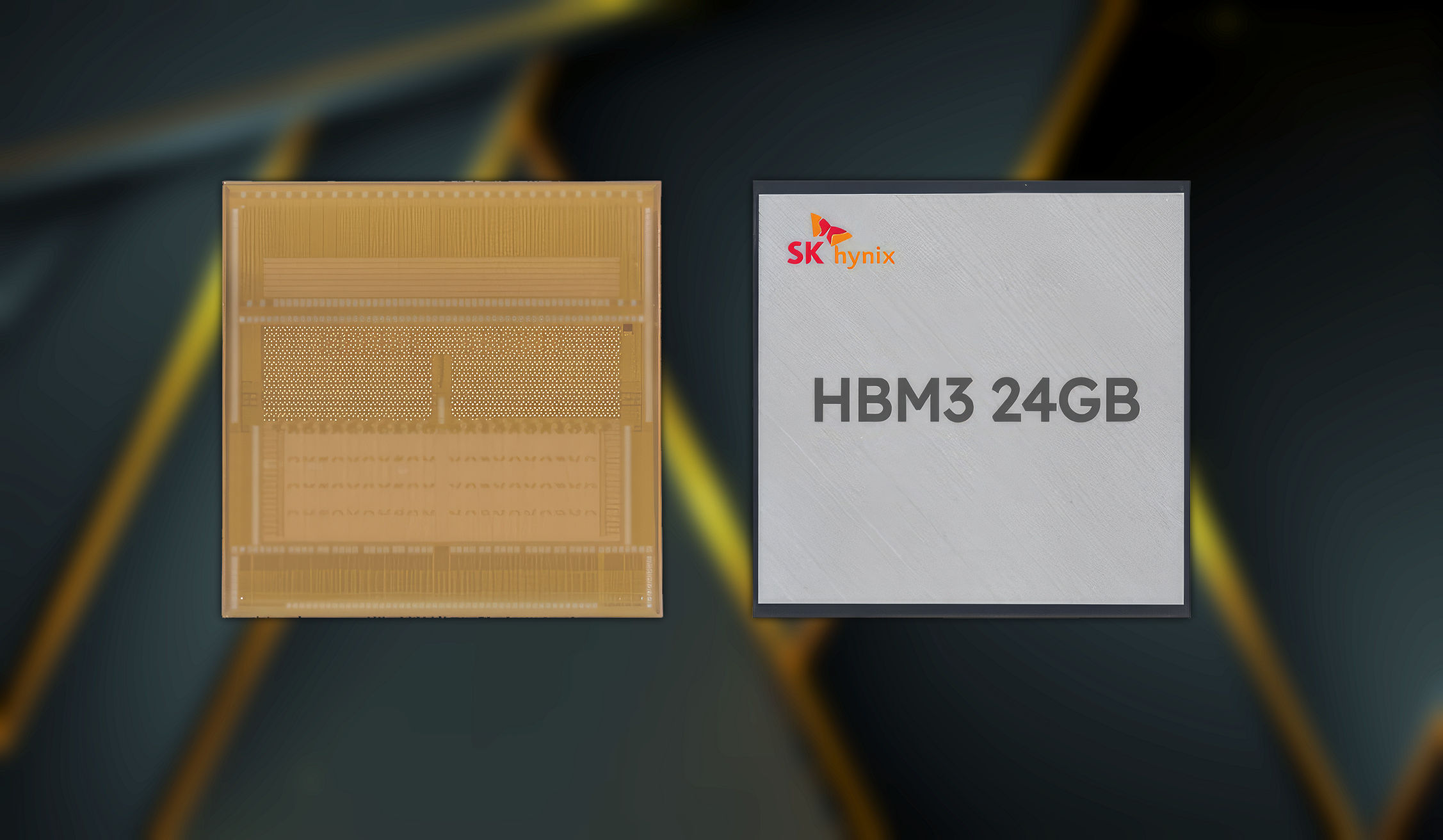

Last month, Micron Technology announced that it had begun mass production of these chips, while Samsung Electronics said it had developed the industry's first 12-stack HBM3E chips. SK Hynix, however, has led the HBM market as the sole supplier of the current version, HBM3, to Nvidia, which holds 80 percent of the market for AI chips.

"The company expects successful mass production of HBM3E, and with our experience ... as the industry's first supplier of HBM3, we expect to cement our leadership in the AI memory space," SK Hynix said. The new HBM3E chip offers a 10 percent improvement in heat dissipation and can process up to 1.18 terabytes of data per second.

According to analysts, SK Hynix's HBM capacity is fully booked for 2024, as explosive demand for AI chipsets drives demand for the high-end memory chips used in them.

"SK Hynix's share price has doubled over the past 12 months on its leading position in HBM chips," said Kim Un-ho, analyst at IBK Investment & Securities.

Nvidia on Monday unveiled its latest flagship AI chip, the B200, which is said to be 30 times faster than its predecessor at certain tasks, in a bid to maintain its dominant position in the AI industry.