Demand for Nvidia chips exceeds supply

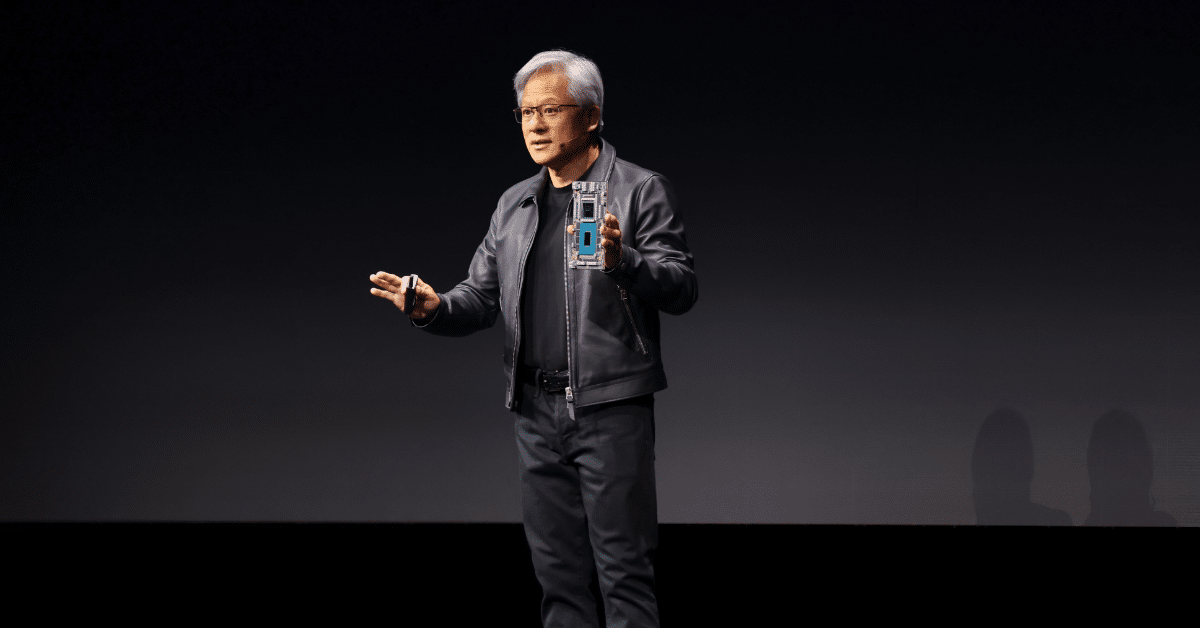

With demand for his company's AI chips soaring and supply limited, Nvidia CEO Jensen Huang was forced to deliver an unusual message on Wednesday. "We allocate fairly. We do our best to allocate fairly and to avoid unnecessary allocation," Huang said in response to a question during Nvidia's fourth-quarter earnings call.

The Nvidia boss was referring to how the company decides who gets access to its limited supply of GPUs, the hardware driving the AI boom. Tech giants like Meta, Microsoft, Amazon and Alphabet can't get enough of Nvidia's GPUs as they race to build the data centers that power popular generative AI services such as ChatGPT, Runway AI and Gemini. And that's just one group of customers. A long list of original equipment manufacturers (OEMs) and original design manufacturers (ODMs) are also eager to get the GPUs, not to mention customers in industries such as biology, healthcare, finance, AI development and robotics.

Having to choose among desperate customers may seem like a good problem to have, and the numbers bear that out: Nvidia's fourth-quarter revenue of $22 billion was more than triple its level a year earlier and came in $2 billion above the company's own forecast.

"Accelerated computing and generative AI have reached critical mass. Demand is rising globally across companies, industries and nations," Huang said in a statement accompanying the results, which sent Nvidia shares up more than 8% in after-hours trading. But managing this demand is no mean feat, and in the chip business, getting it wrong can have disastrous consequences for the balance sheet.

That's why Huang needs customers to trust that Nvidia isn't playing favorites; otherwise they may order more than they actually need or turn to alternatives such as AMD's upcoming AI chips. Huang sought to reassure listeners by explaining how Nvidia works closely with cloud service providers (CSPs), which account for 40% of its data center business, so that they can plan responsibly for their needs.

"Our CSPs have a very clear view of our product roadmap and transitions," Huang said. "And that transparency with our CSPs gives them the confidence of what products to put and where and when... they know the timing to the best of our ability and they know volumes and of course allocation."

The attention that Nvidia's earnings report received on Wednesday cannot be overstated. In an era of booming artificial intelligence and rising corporate demand for its capabilities, Nvidia has emerged as a key player: according to Reuters, it controls more than 80% of the global market for the specialized chips that power AI applications.

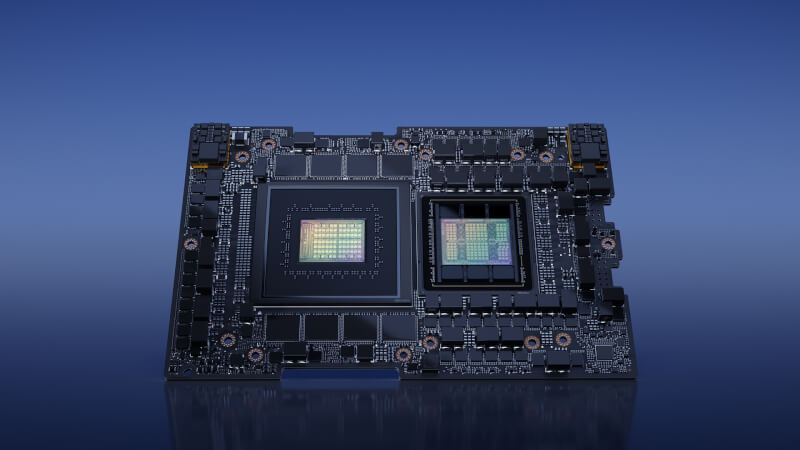

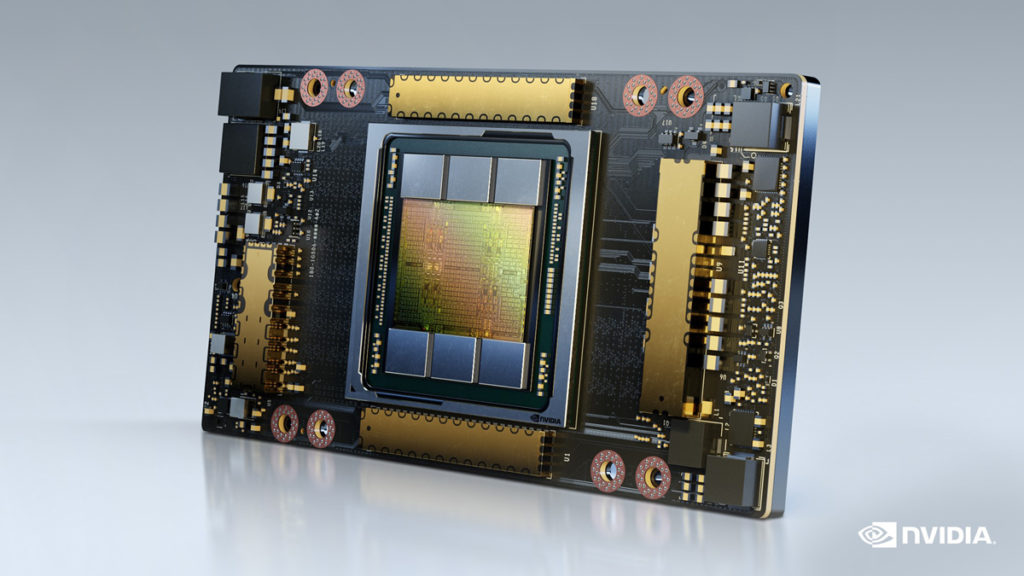

This week, Nvidia's market capitalization rose to $1.8 trillion, making it the world's fourth-most valuable company and pushing it past both Amazon and Alphabet.

When it comes to GPU provisioning, Huang said the issue is more complex than it might seem. Although he praised the supply chain for providing Nvidia with necessary components such as packaging, memory and various other parts, he stressed how large and complex the GPUs themselves are. "You think Nvidia GPUs are like a chip. But the Nvidia Hopper GPU is 35,000 parts. It weighs 70 pounds," Huang said. "These things are really complicated... people call it an AI supercomputer for a good reason. If you ever look in the back of the data center, the systems, the cabling system, the thoughts. It's the most dense, complex cabling system for networking the world has ever had seen."
