Nvidia presents AI chatbot for PCs

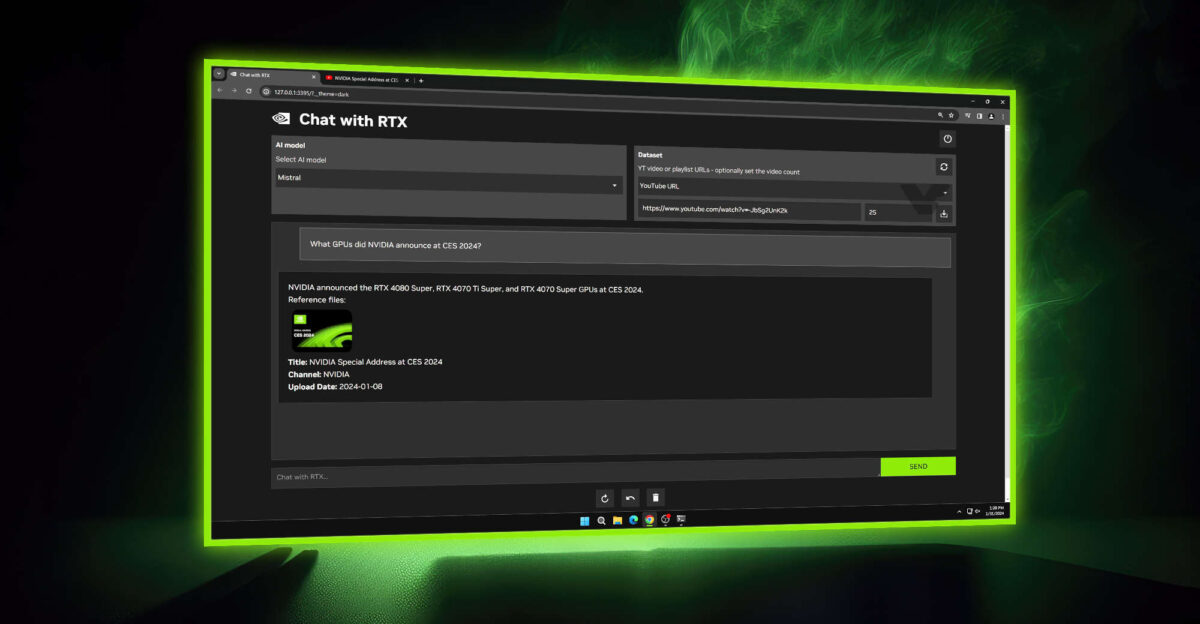

On Tuesday, Nvidia released a technology demo called "Chat with RTX." The software is free to download and lets users connect open-source large language models, including Mistral and Meta Platforms' Llama 2, to their own files and documents.

After a user points "Chat with RTX" to a folder on their computer containing .txt, .pdf and Microsoft Word documents, they can ask the chatbot questions about information contained in the files.

The Nvidia team explained: "Since Chat with RTX runs locally on Windows RTX computers and workstations, the results presented are fast - and the user's data remains on the device. Instead of relying on cloud-based LLM services, Chat with RTX lets users process sensitive data on a local PC without the need to share it with a third party or have an Internet connection."

Nvidia says the current version of the software handles informational queries well but is less suited to questions that require reasoning across the entire set of files. The chatbot's answers on a specific topic also improve when it is given more file content about that topic.
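To make the folder-based workflow concrete, here is a toy Python sketch of ranking local text files against a question by word overlap. It is not Nvidia's implementation, and the article does not describe how "Chat with RTX" works internally. The function names (`tokenize`, `load_text_files`, `top_matches`) and the scoring rule are assumptions for illustration, and only .txt files are read.

```python
from collections import Counter
from pathlib import Path
import re


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def load_text_files(folder):
    """Read every .txt file under the folder (PDF and Word parsing is omitted)."""
    return {
        str(path): path.read_text(encoding="utf-8", errors="ignore")
        for path in sorted(Path(folder).rglob("*.txt"))
    }


def top_matches(question, documents, k=2):
    """Return up to k document names, ranked by question-word occurrences."""
    query_terms = set(tokenize(question))
    scores = {}
    for name, text in documents.items():
        counts = Counter(tokenize(text))
        scores[name] = sum(counts[term] for term in query_terms)
    # Highest score first; ties are broken alphabetically by name.
    ranked = sorted(scores, key=lambda name: (-scores[name], name))
    return [name for name in ranked[:k] if scores[name] > 0]
```

In a design like this, only the best-matching files would be passed to the language model. If the real software works similarly, which is an assumption, that would be consistent with the pattern Nvidia describes: a question answered by one file is easy, while one that depends on every file at once is harder.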

"Chat with RTX" requires Windows 10 or Windows 11, along with an Nvidia GeForce RTX 30 Series or 40 Series GPU with at least 8 GB of video memory (VRAM).

Read more at Nvidia here.
