Google announces new Axion AI CPU

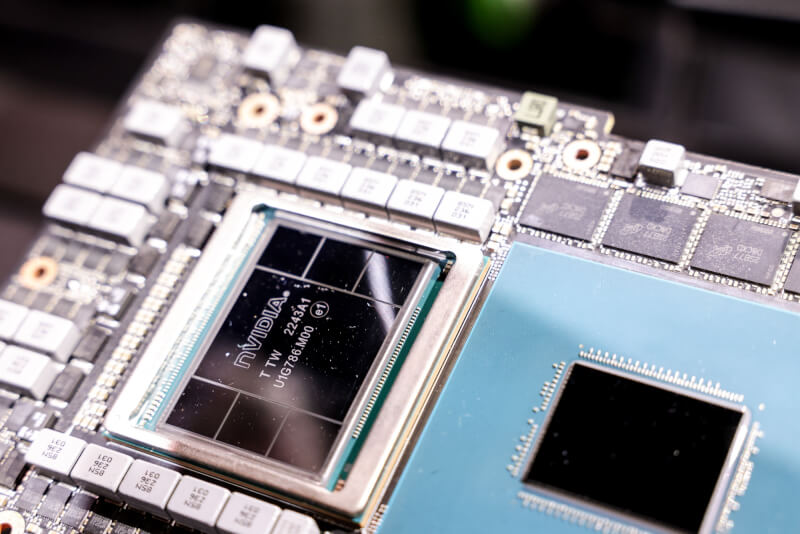

With all the big tech companies investing billions in AI data centers, research and the creation of generative AI models and tools, many are looking to create their own hardware as an alternative to NVIDIA's chips while also competing with AMD, Intel and new AI chip players like Microsoft.

Google is now entering the race with its own Arm-based processor designed for the AI market. Like Google's Tensor Processing Units (TPUs), which developers can only access through Google Cloud, the Arm-based CPU, named Axion, will apparently deliver "superior performance compared to x86 chips."

According to Google, Axion offers 30% better performance than "common Arm chips" and 50% better performance than "current generation x86 chips" produced by Intel and AMD. Google plans to offer the new Axion chip to Google Cloud customers. The Arm-based chip will soon power popular Google services like YouTube Ads. The new chip will run in devices with 8,960 chips to beat the performance of the previous generation.

"We're making it easy for customers to bring their existing workloads to Arm," said Mark Lohmeyer, Google Cloud's vice president and general manager of compute and machine learning infrastructure. "Axion is built on open standards, but customers using Arm anywhere can easily adopt Axion without changing the architecture or rewriting their apps."

With chip scarcity, energy concerns, and the rising costs of providing and servicing AI, it's no wonder Google is seeking its own hardware-based solution for its AI cloud services. It's worth noting that while NVIDIA controlled 83% of the AI data center chip market in 2023, Google's Tensor Processing Units (TPUs) held most of the remaining market share.
